The Rise of Agentic AI: From Conversational Models to Autonomous Systems

Discover how AI is evolving from chatbots to autonomous agents, and how Agentic AI is reshaping workflows through planning, tool use, and feedback loops.

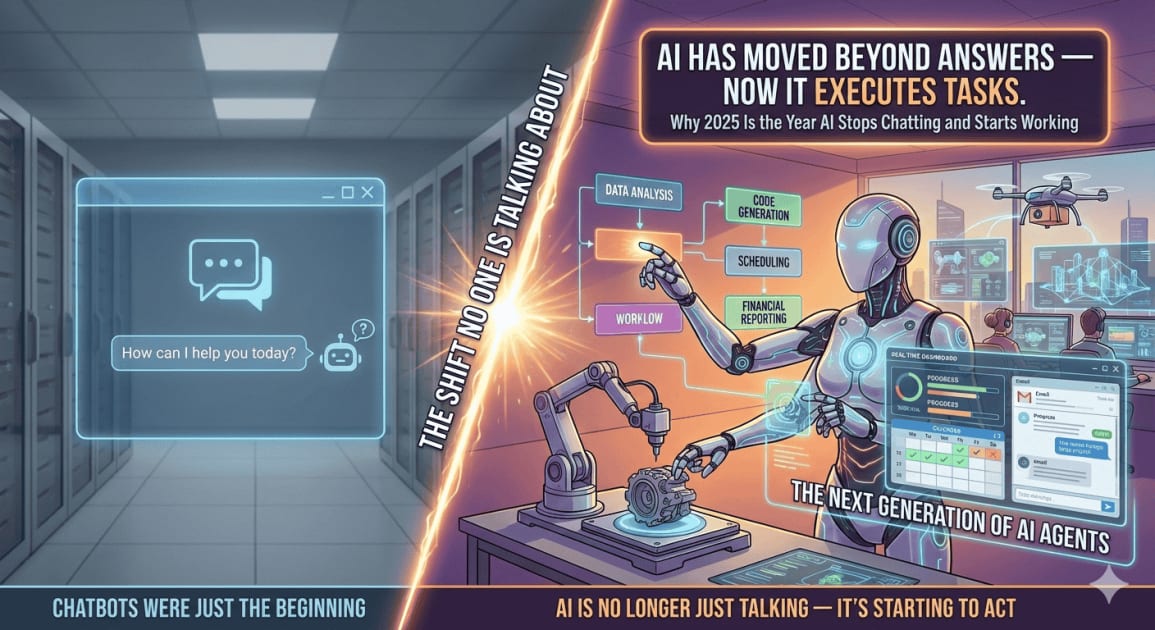

Back in 2023, tools like ChatGPT felt revolutionary. You asked a question, and an AI responded with a surprisingly good answer. For many people, that was their first real interaction with advanced AI.

But those systems had a clear limitation: they could talk, but they couldn’t act.

They generated text, not outcomes.

Today, that boundary is shifting. The industry is moving away from purely conversational AI toward Agentic AI—systems designed to carry out tasks, interact with tools, and operate across multi-step workflows under human supervision.

This is not just “AI getting better.” It is a change in how AI is designed to be used.

The Limitation of the Chatbot Model

Traditional chatbots follow a simple pattern:

Prompt → Response → Stop

They do not remember long-term context, cannot interact directly with software systems, and cannot verify whether their output actually worked.
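The pattern above can be sketched in a few lines of Python. `fake_llm` is a hypothetical stand-in for a real model API. Each call is independent: nothing is stored, no tool is called, and nothing checks the result.

```python
def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model API call: text in, text out.
    return f"Here is an answer to: {prompt}"


def chatbot_turn(prompt: str) -> str:
    # Prompt -> Response -> Stop. Nothing is remembered, no tool is called,
    # and nothing verifies whether the answer actually worked.
    return fake_llm(prompt)


print(chatbot_turn("Deploy my website"))
# The second call cannot see the first one: there is no shared state.
print(chatbot_turn("Did the deployment succeed?"))
```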

This makes them useful for:

- Answering questions

- Drafting text

- Explaining concepts

But ineffective for:

- Executing tasks

- Managing workflows

- Iterating on results

The limitation is architectural. It comes from how the system around the model is built, not from how capable the model is.

What Is an AI Agent? (A Practical Explanation)

An AI agent is a system built around a large language model (LLM) that is extended with:

- Tool access

- Task memory

- Execution control

- Feedback mechanisms

A common way to describe this is:

An LLM provides reasoning, while the agent framework provides action.

The model is no longer just generating language—it is participating in a goal-oriented process.
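One way to picture this split is as a configuration object that wraps the model with the four components listed above. This is a hypothetical structure for illustration, not any particular framework's API. The model, check, and tool are stand-in lambdas.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AgentConfig:
    # Reasoning: the LLM, modeled here as a function from prompt to text.
    model: Callable[[str], str]
    # Feedback mechanism: decides whether an output meets expectations.
    check: Callable[[str], bool]
    # Tool access: the only functions the agent is allowed to invoke.
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)
    # Task memory: notes carried between steps of the same task.
    memory: List[str] = field(default_factory=list)
    # Execution control: a hard limit on how many steps the agent may take.
    max_steps: int = 5


agent = AgentConfig(
    model=lambda prompt: f"thinking about: {prompt}",  # hypothetical stand-in for an LLM call
    check=lambda output: len(output) > 0,
    tools={"search_docs": lambda query: f"results for {query}"},
)
print(sorted(agent.tools), agent.max_steps)  # → ['search_docs'] 5
```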

The Core Mechanism: Plan–Act–Reflect

Many agent systems are built around a structured loop, often described as plan–act–reflect (or a close variant).

Plan (Reasoning Phase)

The agent breaks a high-level goal into smaller, manageable steps.

Example: “Create a website” becomes design → structure → styling → deployment.
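In practice, the plan often comes back from the model as plain text, and the framework turns it into discrete steps it can track. The sketch below assumes the model was prompted to answer with a numbered list. `parse_plan` and the sample output are hypothetical.

```python
import re
from typing import List


def parse_plan(model_output: str) -> List[str]:
    """Extract steps from lines such as '1. Design the layout' or '2) Deploy'."""
    steps = []
    for line in model_output.splitlines():
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:  # lines that are not numbered steps are ignored
            steps.append(match.group(1))
    return steps


# Hypothetical model output for the goal "Create a website".
raw = """Here is a plan:
1. Design the layout
2. Build the page structure
3. Apply styling
4. Deploy to a host"""

print(parse_plan(raw))
# → ['Design the layout', 'Build the page structure', 'Apply styling', 'Deploy to a host']
```

Keeping the plan as a list lets the framework execute, retry, or report on each step individually instead of treating the goal as one opaque request.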

Act (Tool Execution)

The agent interacts with approved tools:

- APIs

- Databases

- Code execution environments

- Internal services

In well-designed deployments, these actions run inside controlled, permissioned systems rather than with unrestricted access to the open internet.
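Here is a minimal sketch of what "approved" and "permissioned" can mean in code, built on a hypothetical `PermissionedTools` wrapper. Each tool is registered with the permission it requires. Calls are refused unless that permission was granted, and every attempt is written to an audit log. The tool functions are stand-ins.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set


@dataclass
class PermissionedTools:
    granted: Set[str]                                        # permissions this agent holds
    tools: Dict[str, Callable[..., Any]] = field(default_factory=dict)
    required: Dict[str, str] = field(default_factory=dict)   # tool name -> permission
    audit_log: List[str] = field(default_factory=list)

    def register(self, name: str, fn: Callable[..., Any], permission: str) -> None:
        self.tools[name] = fn
        self.required[name] = permission

    def call(self, name: str, **kwargs: Any) -> Any:
        permission = self.required.get(name)
        # Unknown tools and tools whose permission was not granted are refused.
        if permission is None or permission not in self.granted:
            self.audit_log.append(f"DENIED {name}")
            raise PermissionError(f"tool {name!r} is not permitted")
        self.audit_log.append(f"CALL {name} {kwargs}")
        return self.tools[name](**kwargs)


tools = PermissionedTools(granted={"db:read"})
tools.register("read_orders", lambda customer: [f"order for {customer}"], "db:read")
tools.register("drop_table", lambda table: None, "db:admin")

print(tools.call("read_orders", customer="acme"))  # → ['order for acme']
try:
    tools.call("drop_table", table="orders")
except PermissionError as err:
    print(err)  # → tool 'drop_table' is not permitted
print(tools.audit_log)
```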

Reflect (Evaluation and Adjustment)

The agent checks outcomes against expectations:

- Did the code run?

- Did the query return valid data?

- Did the output meet constraints?

If not, the agent revises its approach and repeats the cycle.

This loop enables iteration, not autonomy without limits.
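The reflect step and a hard cap on attempts can be sketched together. `reflect_loop`, `toy_act`, and `must_be_integer` are hypothetical names. In a real agent, `act` would call the model and pass the accumulated feedback in the prompt, and `check` might run tests or validate a query result.

```python
from typing import Callable, List, Optional


def reflect_loop(
    act: Callable[[List[str]], str],
    check: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Optional[str]:
    """Run act -> check cycles, feeding each failure message into the next attempt.

    `check` returns None when the output is acceptable, otherwise an error message.
    """
    feedback: List[str] = []
    for _ in range(max_rounds):
        output = act(feedback)       # Act: produce an attempt using past feedback
        error = check(output)        # Reflect: evaluate against expectations
        if error is None:
            return output
        feedback.append(error)       # Adjust: remember what went wrong
    return None                      # Out of budget: escalate instead of looping forever


# Hypothetical actor: returns malformed output until it has seen some feedback.
def toy_act(feedback: List[str]) -> str:
    return "42" if feedback else "forty-two"


def must_be_integer(output: str) -> Optional[str]:
    return None if output.isdigit() else f"expected digits, got {output!r}"


print(reflect_loop(toy_act, must_be_integer))  # → 42
```

The `max_rounds` limit is what keeps iteration from turning into open-ended autonomy. When the budget runs out, the loop returns `None` so a person or another process can take over.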

Tool Use: What “Function Calling” Really Means

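In technical terms, agent tool use is usually implemented through function calling, also called tool invocation.

This means:

- The model chooses from a set of predefined functions and returns a structured call: the function name plus its arguments

- Each function declares a schema for its inputs, typically written in JSON Schema

- The application, not the model, executes the call, usually in a sandboxed and monitored environment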

For example:

- Sending an email requires explicit API permission

- Running code happens in an isolated environment

- Database access is scoped and logged

Agents do not “decide” to act freely—they operate within engineered boundaries.
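The sketch below shows the division of labor: the model only emits a structured request, and the application parses, validates, and executes it. The tool name, its parameters, and the `{"name": ..., "arguments": ...}` call format are assumptions for illustration. Real providers define their own formats and typically describe parameters with JSON Schema. This sketch only checks argument names and Python types.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool definition: parameter names mapped to expected Python types.
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_weather": {
        "description": "Current temperature for a city",
        "parameters": {"city": str},
    }
}


def get_weather(city: str) -> Dict[str, Any]:
    # Stand-in implementation; a real tool would call a weather service.
    return {"city": city, "temp_c": 21}


IMPLEMENTATIONS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def execute_tool_call(raw_call: str) -> Any:
    """Parse a model-emitted call, validate it against the definition, then run it."""
    call = json.loads(raw_call)
    name, args = call["name"], call["arguments"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    params = TOOLS[name]["parameters"]
    if set(args) != set(params):
        raise ValueError(f"expected arguments {sorted(params)}, got {sorted(args)}")
    for key, expected_type in params.items():
        if not isinstance(args[key], expected_type):
            raise TypeError(f"{key} must be {expected_type.__name__}")
    return IMPLEMENTATIONS[name](**args)


# The model does not run anything itself; it only produces this structured request.
model_output = '{"name": "get_weather", "arguments": {"city": "Oslo"}}'
print(execute_tool_call(model_output))  # → {'city': 'Oslo', 'temp_c': 21}
```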

Memory: Short-Term Context vs Long-Term Storage

Chatbots are limited by the model's context window, which acts like short-term memory: anything that falls outside it is no longer available to the model.

Agents extend this with external memory systems, often implemented using:

- Vector databases

- Structured logs

- Task summaries

This allows agents to:

- Recall previous decisions

- Maintain consistency across sessions

- Adapt behavior based on stored information

This is engineered memory, not learning in the human sense.
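Here is a minimal sketch of retrieval-based memory. A toy word-count "embedding" stands in for a learned embedding model, and a Python list stands in for a vector database. Notes are stored with their vectors, and `recall` returns the stored notes most similar to a query by cosine similarity. `MemoryStore` is a hypothetical name.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. Real systems use a learned embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical long-term memory: store notes, retrieve the most similar ones."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, note: str) -> None:
        self.items.append((note, embed(note)))

    def recall(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [note for note, _ in ranked[:k]]


memory = MemoryStore()
memory.add("Decided to deploy the website on the staging server first")
memory.add("User prefers reports as PDF files")
memory.add("Database migration failed on Tuesday; rolled back")

print(memory.recall("which server do we deploy to?"))
# → ['Decided to deploy the website on the staging server first']
```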

Where AI Agents Are Used Today

Today, agentic systems tend to be most effective in structured environments, where tools are well defined and success is easy to check:

- Software development (testing, refactoring, deployment pipelines)

- Enterprise operations (reporting, monitoring, workflow automation)

- Customer support (triage, retrieval, drafting responses)

- Research assistance (information gathering and summarization)

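In most production deployments, agents operate with human-in-the-loop oversight (a person approves key actions before they run) or human-on-the-loop oversight (a person monitors the agent and can intervene).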

The Critical Safeguard: Human-in-the-Loop

Because agents can perform real actions, organizations generally do not grant them unrestricted autonomy.

Human oversight ensures:

- Errors are caught

- Sensitive actions are approved

- Accountability remains clear

A useful way to frame the division of labor:

Humans define goals and boundaries.

Agents handle execution within those boundaries.
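One way to encode "agents handle execution within those boundaries" is an approval gate. Routine actions run directly, while actions on a sensitive list wait for a human decision. In this hypothetical sketch, the `approve` callback stands in for a real review step such as a ticket or chat prompt, and `SENSITIVE` is an illustrative list.

```python
from typing import Callable, Dict, List

# Illustrative list of actions that always need human sign-off.
SENSITIVE = {"send_email", "issue_refund", "delete_record"}

Approver = Callable[[str, Dict[str, str]], bool]


def execute(action: str, args: Dict[str, str], approve: Approver, log: List[str]) -> str:
    """Run an action, pausing for human approval if it is sensitive."""
    if action in SENSITIVE and not approve(action, args):
        log.append(f"blocked {action} {args}")
        return "blocked: not approved"
    log.append(f"ran {action} {args}")
    # A real system would dispatch to the tool here; this sketch only records it.
    return f"ran {action}"


def reviewer_rejects(action: str, args: Dict[str, str]) -> bool:
    # Stand-in for a person reviewing the request; this one always says no.
    return False


audit: List[str] = []
print(execute("summarize_ticket", {"id": "T-1"}, reviewer_rejects, audit))  # → ran summarize_ticket
print(execute("issue_refund", {"amount": "50"}, reviewer_rejects, audit))    # → blocked: not approved
print(audit)
```

Every action leaves a log entry whether it ran or was blocked, which keeps accountability clear.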

Conclusion: From Information to Execution

Chatbots changed how people access information.

AI agents are changing how work gets executed.

The shift is not about replacing people, but about moving repetitive, structured tasks into systems that can operate continuously, consistently, and at scale.

The important question is no longer:

“What can I ask the AI?”

It is now:

“What parts of this workflow can AI safely and reliably handle?”

That distinction defines the agentic era.